Does pretraining on a medical text corpus provide an edge when answering COVID related questions?

Problem Statement

We are planning to utilize BERT to build a Question Answering model trained to answer questions from COVID related research articles. Question-Answering models are Natural Language Processing Models that can answer questions given some context, and sometimes without any context. Based on how the answer is generated, the task can be classified either as Extractive Question Answering or Generative Question Answering. In Extractive Question Answering, the model extracts the answer from a context and provides it directly to the user. It is usually built with BERT-like models [1].

Lee et. al [2] introduced the BioBERT model where they have adapted the pre-trained language model BERT for biomedical corpora from PubMed and PMC. We are planning to build a QA model utilizing the BioBERT model to explore if pretraining on a medical text corpus provides an edge when answering COVID related questions.

Related Work

BERT (Bidirectional Encoder Representations from Transformers) [3] is based on Transformers, trained using the Transformer Encoder architecture, with Masked Language Modelling (MLM) and the Next Sentence Prediction (NSP) pre-training objective. It is designed to pre-train deep bidirectional representations from an unlabeled text by jointly conditioning on both the left and right contexts. The pre-trained BERT model can be fine-tuned with an additional output layer to create models for a wide range of NLP tasks.

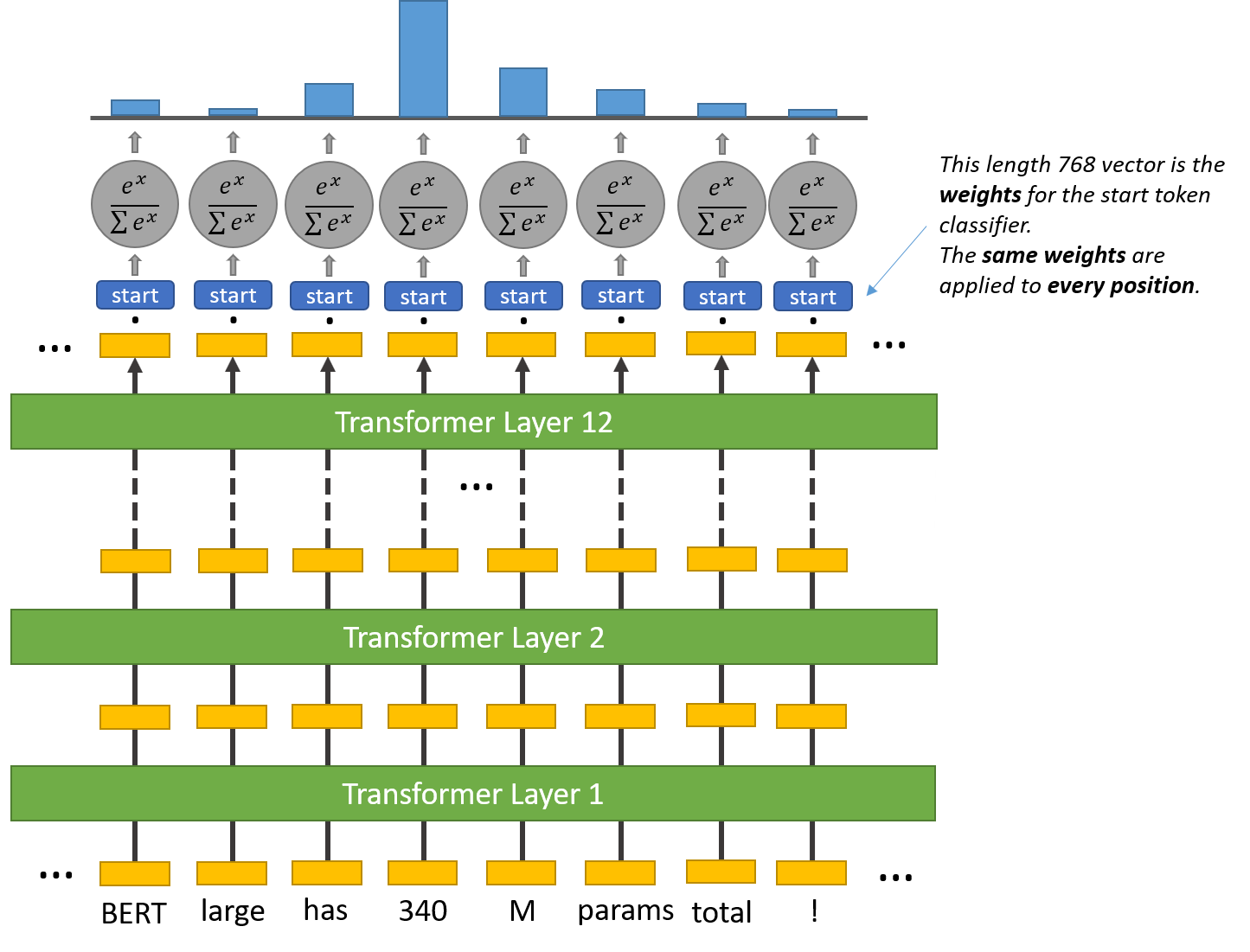

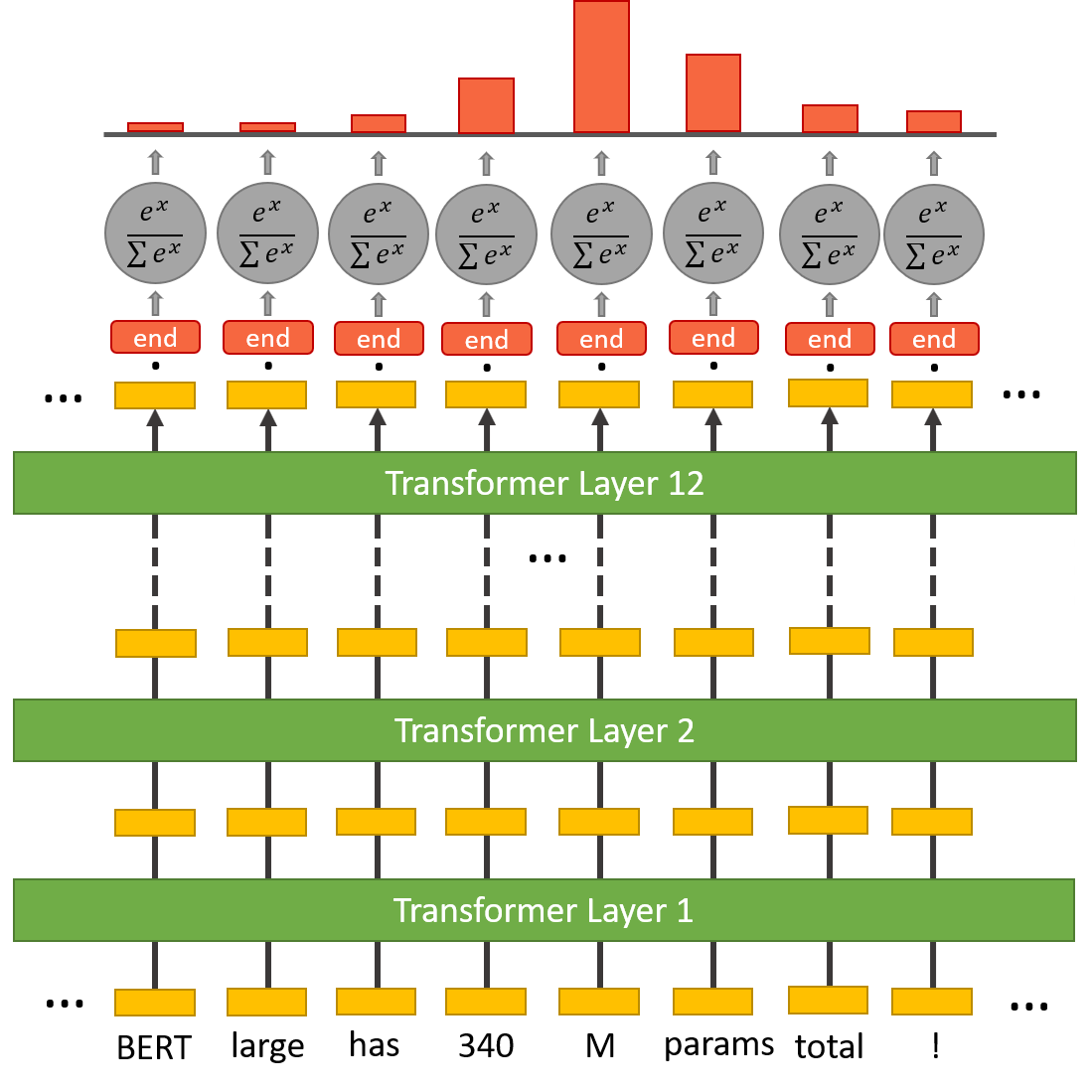

To perform the QA task we need to add a question-answering head on top of the BERT model to find the start token and end token of an answer for a given paragraph. Inside the question answering head there are two sets of weights, one for the start token and another for the end token. They have the same dimensions as the output embeddings.

The output embedding of every token in the text is fed into this head. A dot product is computed between the start weights and the output embeddings. Then the softmax activation is applied to produce a probability distribution over all of the words.The word with the highest probability is picked as the start token. The same process is repeated for the end token.

|

|

Dataset

The Question Answering model requires data in the SQuAD (Stanford Question Answering Dataset) format. SQuAD is a reading comprehension dataset, consisting of questions posed by crowdworkers on a set of Wikipedia articles, where the answer to every question is a segment of text from the corresponding reading passage, or the question might be unanswerable.

For our project, we plan to use the COVID-QA [4] dataset built using the CORD-19 [5] dataset. The dataset consists of a collection of work of several Researchers and its analysis. It consists of around 59 thousand papers and around 41 thousand full texts incorporating papers distributed in more than 3200 journals. The COVID-QA dataset has 147 scientific articles mostly related to COVID-19 selecetd from CORD-19 and annotated by experts in SQuAD style fashion where annotators marked text as answers and formulate corresponding questions.

Some example questions-answers in the dataset:

Question: What is the main cause of HIV-1 infection in children?

Answer: Mother-to-child transmission (MTCT) is the main cause of HIV-1 infection in children worldwide

Question: What plays the crucial role in the Mother to Child Transmission of HIV-1 and what increases the risk

Answer: DC-SIGNR plays a crucial role in MTCT of HIV-1 and that impaired placental DC-SIGNR expression increases risk of transmission

Plan

We are planning to categorize our dataset based on topics in the text, question semantics etc. We would train both the models by holding off one of the categories.

Case 1: We would hold one of the topics out while training the dataset and test with all the topics to compare the model performances on this unseen topic.

Case 2: We would hold one of question types out while training the dataset and test with all the question types to compare the model performances on this unseen question type.

One of our hypotheses is that the BioBert model would perform better than the other model in case 1. With these experiments we would visualize the performance of the BERT models in the Question-Answering task and also explore how impactful is pre-training with biomedical corpora.

References

[1] https://huggingface.co/tasks/question-answering

[2] BioBERT: a pre-trained biomedical language representation model for biomedical text mining

[3] BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

[4] COVID-QA: A Question Answering Dataset for COVID-19

[5] The COVID-19 Open Research Dataset (CORD-19)